We’ve discussed our approach to dev ops before, specifically how we use CDK with Python in our weather stack deployment.

It’s something we like to talk about, so let’s do an update!

Weather Stack Background

Unlike most of our competitors, we don’t have just one weather data processing system; we have multiple. It’s getting really interesting.

A bit of terminology: In dev ops parlance, a stack is a set of machines, storage, networking, and other logic you can stand up or tear down as a self-contained unit. We use AWS, so we’re referring to a CloudFormation Stack.

We build our own weather stacks on top of that, one per customer, or at least that’s how it started.

One Big Weather Stack per Customer

Initially, we deployed a giant stack for each customer. The parts that ingested weather model data, processed it for display, and served it to the front ends were all in a single stack. We always suspected it would grow unwieldy, but the part that broke first was interesting.

It was AWS’ Cloud Development Kit (CDK) that broke first. It can only handle a certain level of complexity in a stack deployment, or at least it couldn’t handle what we were up to. We found ourselves chasing down errors that turned out to be race conditions or that had only weird workarounds online.

That’s not great, but we still liked CDK, so what to do?

Part One: A Weather Stack Deployment per Model

We knew the single-stack approach would grow too large, so we pulled out the most obvious part: model import and processing now run in separate stacks, one per model.
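As a rough illustration of the one-stack-per-model idea, here is a minimal sketch in plain Python. It is not our actual CDK code: `ModelStackSpec`, `stack_name`, and the `weather-model-` prefix are hypothetical names made up for this example. In a real CDK app, each spec would presumably drive the construction of its own `Stack`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelStackSpec:
    """Describes one model-import stack. All field names are hypothetical."""
    model: str    # e.g. "gfs" or "hrrr"
    version: str  # version of the importer, not of the model itself


def stack_name(spec: ModelStackSpec) -> str:
    # CloudFormation stack names allow only letters, digits, and hyphens,
    # so dots in the version are replaced with hyphens.
    return f"weather-model-{spec.model}-{spec.version.replace('.', '-')}"


specs = [ModelStackSpec("gfs", "v3"), ModelStackSpec("hrrr", "v1.2")]
names = [stack_name(s) for s in specs]
print(names)  # ['weather-model-gfs-v3', 'weather-model-hrrr-v1-2']
```

Putting the version in the name is one way to let an old and a new stack for the same model exist side by side.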

If you’re unfamiliar with weather models, they’re a bit like this: You have an incoming data set, like GFS. It’s published on a schedule, every 6 hours for GFS, and it tends to trickle in over time. Not everything is a model; some are observations, but we treat those similarly.
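To make the publishing schedule concrete, here is a small sketch that finds the most recent model cycle for a given time. It assumes cycles are aligned to 00 UTC and that the interval divides 24 evenly, which holds for GFS (00, 06, 12, and 18 UTC). The function name `latest_cycle` is made up for this example.

```python
from datetime import datetime, timezone


def latest_cycle(now: datetime, interval_hours: int = 6) -> datetime:
    """Return the most recent cycle start at or before `now`, in UTC.

    `now` must be timezone-aware. Assumes cycles start at 00 UTC and
    repeat every `interval_hours` hours.
    """
    now = now.astimezone(timezone.utc)
    cycle_hour = (now.hour // interval_hours) * interval_hours
    return now.replace(hour=cycle_hour, minute=0, second=0, microsecond=0)


when = datetime(2024, 1, 1, 7, 30, tzinfo=timezone.utc)
print(latest_cycle(when))  # 2024-01-01 06:00:00+00:00
```

Because the data trickles in, the latest cycle by the clock is usually not yet complete, so an importer still has to check what has actually arrived.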

Sometimes, you have to scrape an FTP site for updates; sometimes, they come to you in notifications. Often, you can process a single time slice at a time; sometimes, you can’t. Every model is a little different, but the basics are the same. Data comes in, and you process it as fast as possible and pass it on for display or query.
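The "data comes in, process it, pass it on" loop can be sketched roughly like this for the case where slices can be handled one at a time. It is an illustration under assumptions, not our importer: the function name, the slice labels such as `f003`, and the idea of diffing a listing against a set of already-seen slices are all hypothetical.

```python
from typing import Callable, Iterable, List, Set


def process_new_slices(
    available: Iterable[str],
    seen: Set[str],
    process: Callable[[str], None],
) -> List[str]:
    """Process slices not handled yet, in sorted order, and return them."""
    handled = []
    for slice_id in sorted(set(available) - seen):
        process(slice_id)
        seen.add(slice_id)  # mark as seen only after processing succeeds
        handled.append(slice_id)
    return handled


seen: Set[str] = set()
processed: List[str] = []

# First poll: two time slices have been published so far.
process_new_slices(["f000", "f003"], seen, processed.append)
# Second poll: one more slice has trickled in; the first two are skipped.
process_new_slices(["f000", "f003", "f006"], seen, processed.append)

print(processed)  # ['f000', 'f003', 'f006']
```

The same function works whether the listing comes from scraping a site or from a batch of notifications; only the source of `available` changes.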

We process a range of models, including GFS, HRRR, and many more, plus a bunch that our customers buy. Each of those now runs in its own stack, which has been great.

First, it makes development easier. We add or modify a model, deploy the stack, and poke at it to see how it’s doing. Since that new model is isolated from everything else, it’s easy to experiment.

Second, it’s great for versioning. Users can still look at the old model stack while we get the new one going. It sure beats the scramble when you set everything up at once.

Part Two: A Weather Stack Deployment per Customer

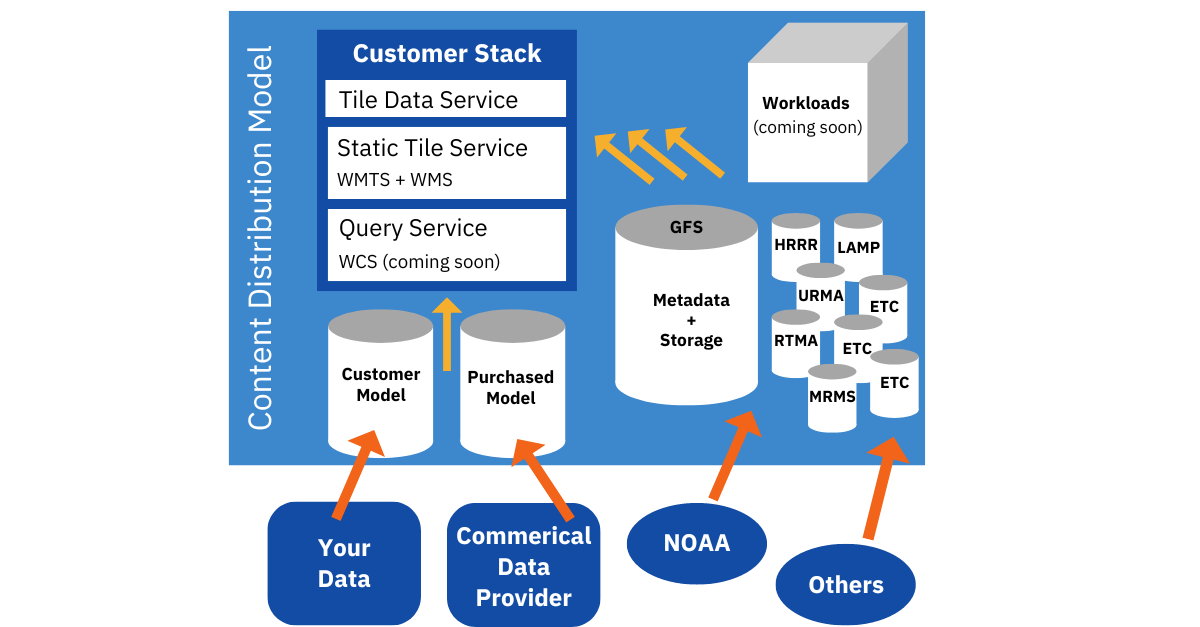

We still talk about one weather stack per customer, so you may wonder how that works in the new regime. It’s pretty simple: the customer stacks point to the model stacks.

Customer stacks are now focused on data distribution and query. They handle Terrier’s data requests and the new WMTS and WMS functionality. They’ll take on WCS when that comes out, too.

Thus, we can scale these customer-facing stacks up or down as required. We can even have multiple versions for testing; indeed, we’ll usually have a dev and a prod version available as we add new functionality.

A customer stack can only see the models the customer uses (and pays for). Licensing gets complex in weather, so we keep everything isolated.
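Here is one way the "customer stacks point to model stacks, but only the licensed ones" rule could be expressed. This is a sketch with made-up data: the registry contents, the stack names, and `visible_model_stacks` are hypothetical and only illustrate the filtering.

```python
from typing import Dict, Set

# Hypothetical registry: model name -> model stack currently serving it.
MODEL_STACKS: Dict[str, str] = {
    "gfs": "weather-model-gfs-v3",
    "hrrr": "weather-model-hrrr-v1",
    "vendor-x": "weather-model-vendor-x-v2",
}


def visible_model_stacks(licensed: Set[str], registry: Dict[str, str]) -> Dict[str, str]:
    """Return only the model stacks a customer is licensed to read."""
    unknown = licensed - set(registry)
    if unknown:
        # Fail loudly rather than deploy a customer stack with a dangling reference.
        raise KeyError(f"no stack deployed for: {sorted(unknown)}")
    return {model: registry[model] for model in sorted(licensed)}


print(visible_model_stacks({"gfs", "hrrr"}, MODEL_STACKS))
# {'gfs': 'weather-model-gfs-v3', 'hrrr': 'weather-model-hrrr-v1'}
```

Starting from the licensed set, rather than removing forbidden models from the full registry, means a newly added model is invisible to every customer until someone grants it.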

Updates and Fixes

For a functionality update or minor bug fix to a model importer, we’ll spin up a new stack for the latest model. When satisfied, we’ll fire up a new customer stack, attach it to their dev endpoint, and let the customer use it.

When everyone is happy with the new model, we switch from dev to prod and are done. We can even deploy a dev stack on Friday because it’s just a dev stack.
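The dev-to-prod switch amounts to repointing an endpoint. A minimal sketch, assuming each customer has one dev and one prod endpoint that each route to a named customer stack; `CustomerEndpoints`, `promote`, and the stack names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CustomerEndpoints:
    """Which customer stack each endpoint routes to. Names are hypothetical."""
    prod: str
    dev: Optional[str] = None

    def promote(self) -> str:
        """Point prod at the current dev stack and return the retired prod stack."""
        if self.dev is None:
            raise ValueError("nothing deployed on dev to promote")
        retired, self.prod, self.dev = self.prod, self.dev, None
        return retired


endpoints = CustomerEndpoints(prod="acme-customer-v1", dev="acme-customer-v2")
old_stack = endpoints.promote()
print(endpoints.prod, old_stack)  # acme-customer-v2 acme-customer-v1
```

Returning the retired stack name makes it easy to keep the old stack around for a while before tearing it down.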

If something catches fire, we *can* force an update to an existing stack. For example, if a NOAA endpoint falls over (cough), we can change where that stack looks or even force a container replacement for the vital part.

We track it all in git, so it’s easy to roll back, update the failing thing, and force a deployment. We rarely have to do this.

Stacks Upon Stacks

Stacks pointing to other stacks can get complex, so we also track that in git. At any given moment, we can see which versions of the models are in use by which customer, for dev or prod, and update accordingly.
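A version-controlled manifest is one simple way to answer "who is using which model version". The sketch below assumes a JSON file checked into the repository; the layout, the customer names, and `users_of` are invented for illustration and are not our real format.

```python
import json
from typing import Dict, List, Tuple

# Hypothetical manifest: customer -> environment -> model -> model stack version.
MANIFEST_JSON = """
{
  "acme":   {"dev":  {"gfs": "v3", "hrrr": "v2"},
             "prod": {"gfs": "v3", "hrrr": "v1"}},
  "globex": {"prod": {"gfs": "v2"}}
}
"""

Manifest = Dict[str, Dict[str, Dict[str, str]]]


def users_of(manifest: Manifest, model: str, version: str) -> List[Tuple[str, str]]:
    """List (customer, environment) pairs that point at the given model version."""
    return sorted(
        (customer, env)
        for customer, envs in manifest.items()
        for env, models in envs.items()
        if models.get(model) == version
    )


manifest = json.loads(MANIFEST_JSON)
print(users_of(manifest, "gfs", "v3"))  # [('acme', 'dev'), ('acme', 'prod')]
print(users_of(manifest, "gfs", "v2"))  # [('globex', 'prod')]
```

An empty result for an old version is a reasonable signal that its model stack can be torn down.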

The customer-facing stacks come up quickly and are straightforward to scale if needed. Moving the model data out proved to be a boon to sanity.

Thus, our solution to the CDK complexity problem was to break our systems into more pieces. That turned frustrating problems into a pleasant and quick deployment process.