Running a weather company in the cloud means processing massive amounts of data every hour. Our software engineers write our importers, and more recently, our atmospheric scientist writes the real weather code. But after our AWS Lambda costs spiraled out of control, we migrated to Elastic Container Service (ECS) with Fargate and discovered that effective Fargate container management is the difference between a sustainable business and a budget nightmare. As the CEO handling deployment, I’ve learned these lessons the hard way.

Acting Like a DevOps Professional

When we started the company, one of my goals was to do everything in the cloud and make it all DevOps compatible. What that means is vague, but I’d boil it down to:

- Everything is containerized

- No server is sacred

- Restarts and scaling are automatic (within reason)

- Old services are replaced, not upgraded

When I want to do something dodgy, I ask myself, ‘Would a professional do this?’ and then I usually do not do it. If you’re a CS professional, you’re thinking, ‘well obviously,’ or maybe, ‘well obviously we should be doing that, and I wish we could.’

We’ve had the luxury of writing it all from scratch, and we’ve learned a great deal as we’ve progressed. Along the way, we’ve discovered that effective Fargate container management is key to keeping our costs reasonable while maintaining reliability.

Lambdas are Great, but Expensive

Most of our data processing is done by importers. We take data from various models and transform it into rapidly displayable and queryable data. For some data sets, such as a radar frame, that’s relatively simple. For large models, like HRRR, it can take a while, as our users increasingly demand more levels and variables.

We initially used AWS Lambdas, but they became too unwieldy and expensive for large models with numerous hours, variables, and levels. Sometimes a process would even exceed the 15-minute limit. When that happens, it’s something we need to address, but it’s nicer to have it be an optimization rather than an emergency.

Effective Fargate container management has become essential to maintaining both reliable and cost-effective operations. For everything except radar and lightning, we are now running our importers in ECS clusters with Fargate. This led to some interesting scaling problems, mostly scaling down.

The Perfect Container, or at Least Good Enough

The beauty of Lambda is that it does most of the work for you. Its rules are simple, and implementation is even more so. Your logic is called, you do a thing, and exit. If you take too long or run out of resources, AWS kills your container and tries again. Threading can be useful, but multiple processes are less so. It’s a one-and-done sort of approach.

With ECS, things get much more complicated. We’re using Fargate, which simplifies things a bit and isn’t so much more expensive that it’s an issue for us. However, with Fargate, you must decide how much of a virtual machine you need for a task. You can request a larger number of smaller instances, but we’ve found it to be more cost-effective to request a smaller number of larger ones.

We run multiple processes in Python, slightly more than the number of virtual CPUs, and that seems to be closer to an optimal balance of resources and cost. But it makes the process logic more complicated.
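As a rough illustration of that sizing, here is a minimal sketch of a process pool with slightly more workers than vCPUs. The `extra` margin of 2, the `import_slice` stand-in, and the use of `os.cpu_count()` as a proxy for the task's vCPUs are all assumptions for the example, not our actual importer code.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def worker_count(vcpus: int, extra: int = 2) -> int:
    """Return slightly more processes than vCPUs; `extra` is a value to tune."""
    return max(1, vcpus) + extra


def import_slice(key: str) -> str:
    """Hypothetical stand-in for one importer step on one input file."""
    return key.upper()


def run_batch(keys, vcpus=None):
    """Process a batch of inputs across a pool sized by worker_count()."""
    # Assumption: os.cpu_count() reflects the task size; pass vcpus explicitly if not.
    vcpus = vcpus or os.cpu_count() or 1
    with ProcessPoolExecutor(max_workers=worker_count(vcpus)) as pool:
        return list(pool.map(import_slice, keys))


if __name__ == "__main__":
    print(run_batch(["slice-a", "slice-b"]))
```

With 8 vCPUs this gives 10 worker processes. The right margin depends on how much time each import spends waiting on I/O versus computing.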

Scaling Up Isn’t Too Hard

We process a variety of different weather models and real-time data, which have very different cadences. Some come in every couple of minutes and are small, like lightning and radar data, so we still use Lambdas for those. Others are big, but very predictable, like the GFS.

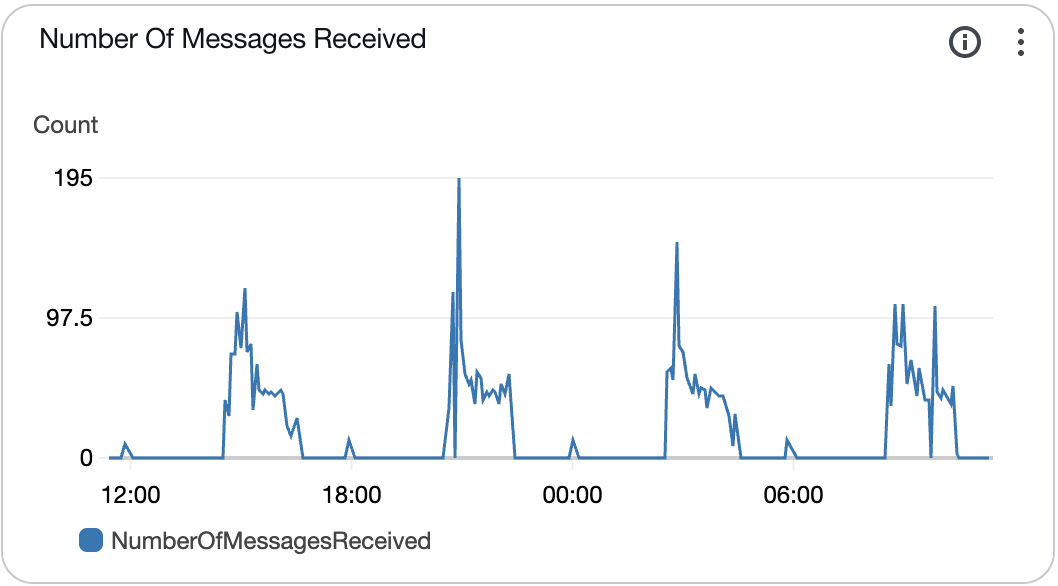

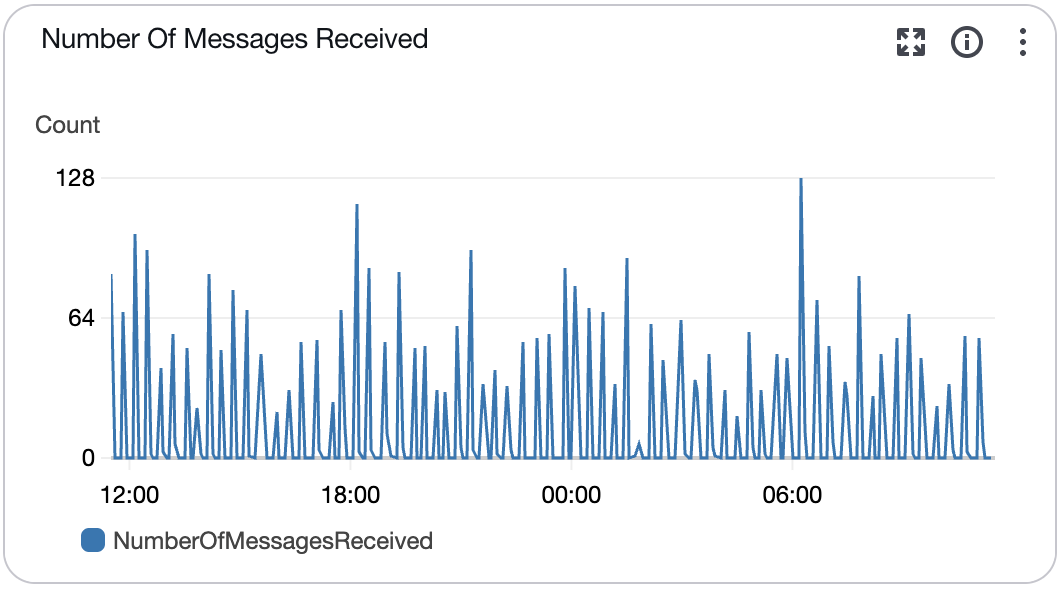

Examining one of our GFS queues reveals the pattern. The model runs every 6 hours. We start getting data a while after that, over the next couple of hours, and then we’re basically done.

Scaling up for this is simple enough. CloudWatch monitors the queue, and when it detects messages piling up, ECS spins up a new, relatively beefy instance. We know we’re getting a lot of messages at once, so we might as well throw some weight at the problem. Our import logic runs multiple processes in Python, which does work if you’re careful.

That’s great for GFS, but it becomes more challenging for a sub-hourly model like HRRR, which has many different components. It’s more chaotic.

There’s still a regular pattern here with spikes in activity. What we can’t see is the cost of those messages. Some are sub-hourly surface variables and very fast to process. Some are sigma-level data, which takes us much longer. Our customers use all of it in one form or another, so this can take a while as a group, even if individual time slices are relatively quick.

To make it more complicated, there’s a burst of activity at the end of each model run as we reorganize the data by time to make fast variable lookup… well, fast.

As with GFS, we scale up based on the number of messages in the queue. That part works well.
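To make the idea concrete, queue-depth scaling can be reduced to a small sizing rule. The sketch below is an assumption about how such a rule might look, not our actual CloudWatch configuration; `messages_per_task` and `max_tasks` are hypothetical tuning values.

```python
import math


def desired_tasks(visible_messages: int, messages_per_task: int, max_tasks: int) -> int:
    """Map queue depth to a task count, capped at max_tasks."""
    if messages_per_task <= 0:
        raise ValueError("messages_per_task must be positive")
    if visible_messages <= 0:
        return 0  # nothing queued, so nothing needs to run
    return min(max_tasks, math.ceil(visible_messages / messages_per_task))
```

In practice a rule like this would usually live in a scaling policy driven by the queue's visible-message metric rather than in application code. As noted above, the count alone says nothing about how expensive each message is.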

The Challenge of Fargate Container Management: Scaling Down

What we really want to avoid is paying for instances that are doing nothing. With AWS, it’s always been an issue that bothers me. It’s just too easy to spin things up and harder to get rid of them once you do.

ECS does offer some good mechanisms, but it took some time to use them correctly in our containers. We started out with a fairly blunt approach, setting the ECS_CONTAINER_STOP_TIMEOUT to a large value, basically demanding ECS not shut us down. That was costly.

We then moved to the task-protection API, which let us get much more granular with our importers. We can tell ECS when we’re working and for how long. We can then clear the protection status when an importer is out of work. That was better, but not quite there, and it took a lot of tinkering to get it right.
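For readers who haven't used it, a container can set or clear its own protection by calling the ECS agent endpoint exposed through the `ECS_AGENT_URI` environment variable. This is a minimal sketch using only the standard library; the function names and the 5-second timeout are choices made for the example.

```python
import json
import os
import urllib.request


def protection_request(agent_uri, enabled, expires_in_minutes=None):
    """Build the PUT request that sets or clears scale-in protection."""
    body = {"ProtectionEnabled": enabled}
    if enabled and expires_in_minutes is not None:
        # Protection lapses on its own if the importer dies without clearing it.
        body["ExpiresInMinutes"] = expires_in_minutes
    return urllib.request.Request(
        url=f"{agent_uri}/task-protection/v1/state",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def set_protection(enabled, expires_in_minutes=None):
    """Send the request from inside a running ECS task and return the reply."""
    agent_uri = os.environ["ECS_AGENT_URI"]  # injected by ECS into the container
    req = protection_request(agent_uri, enabled, expires_in_minutes)
    with urllib.request.urlopen(req, timeout=5) as resp:
        return json.load(resp)
```

An importer would call `set_protection(True, 30)` before picking up work and `set_protection(False)` once it runs dry. Choosing an expiry a little longer than the slowest expected import keeps a crashed task from holding an instance open indefinitely.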

For our work, there’s a tension between the number of virtual CPUs in a Fargate instance and how quickly we can shut it down. We might have 8 vCPUs and, let’s say, 10 processes potentially going. If there’s still 1 process working in there, we can’t let the container end. If there are 8 imports spread across 8 instances, that’s a terrible use of resources.
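One way to handle the "still 1 process working" case is to count active imports in the parent process and only change protection on the transitions between idle and busy. The `ActiveWork` class below is a hypothetical sketch of that bookkeeping; the callbacks are where protection would be set or cleared.

```python
import threading


class ActiveWork:
    """Count in-flight imports; fire callbacks on idle->busy and busy->idle."""

    def __init__(self, on_busy, on_idle):
        self._count = 0
        # Lives in the parent process that dispatches work to the workers.
        self._lock = threading.RLock()
        self._on_busy = on_busy
        self._on_idle = on_idle

    def start(self):
        with self._lock:
            self._count += 1
            if self._count == 1:
                self._on_busy()  # first import started: protect the task

    def finish(self):
        with self._lock:
            if self._count == 0:
                raise RuntimeError("finish() called without a matching start()")
            self._count -= 1
            if self._count == 0:
                self._on_idle()  # last import finished: safe to clear protection

    @property
    def count(self):
        with self._lock:
            return self._count
```

The container stays protected as long as the count is above zero, which is exactly why one slow import on an otherwise idle 8 vCPU instance is so wasteful.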

To work around this problem, we allow instances that are already busy to request new tasks more frequently, thereby potentially starving instances that aren’t doing much. It’s improved things somewhat and helps reduce our costs further, but we still have more work to do.
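A simple way to express "busy instances ask more often" is to shrink the polling delay as an instance fills up. The linear rule and the 1 to 20 second range below are assumptions chosen for illustration, not the values we run with.

```python
def poll_delay(active, capacity, min_delay=1.0, max_delay=20.0):
    """Seconds to wait before asking the queue for more work.

    Busier instances poll sooner, so they tend to win new messages and
    nearly idle instances tend to drain and shut down.
    """
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    if active >= capacity:
        return None  # no free process slot, so do not poll at all
    busy_fraction = active / capacity
    return max_delay - (max_delay - min_delay) * busy_fraction
```

With 10 process slots, an idle instance waits the full 20 seconds while one running 5 imports waits 10.5 seconds. This only biases where work lands; it does not guarantee that the emptier instance drains.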

A Note About Filtering

We’re watching S3 buckets for our input data in all cases. Either we’re watching a NOAA bucket containing model data, or we’ve got a bucket of our own where we deposit scraped weather data.

That’s a fine mechanism, watching new file creation, but it’s a bit noisy. One model slice file might result in a couple of different files, and we don’t use all the model data in every case.

When using a Lambda for an importer, a wasted call wasn’t very expensive. We could look at the filename and discard it. With a queue, that works poorly: ECS scales based on the number of messages in the queue, and if many of those messages are for files we’ll discard, that number does not accurately reflect the workload.

The solution was a simple Python-based filter program that takes a regular expression as input. I’m a fan of this regular expression site for testing. We run the filter as a Lambda, which handles Python code directly, making it a very simple and inexpensive operation that doesn’t factor into our costs. With only real inputs reaching the queue, our scaling logic became much simpler.
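A filter like this can be very small. The sketch below assumes the standard S3 event notification shape (if the event arrives wrapped, for example inside another notification, it would need unwrapping first), and the `WANTED` pattern is purely illustrative rather than the expression we use.

```python
import re
from urllib.parse import unquote_plus

# Illustrative pattern only: keep HRRR surface GRIB2 files, skip everything else.
WANTED = re.compile(r"hrrr\.t\d{2}z\.wrfsfcf\d{2}\.grib2$")


def wanted_keys(event, pattern=WANTED):
    """Return the object keys in an S3 event that match the pattern."""
    keys = []
    for record in event.get("Records", []):
        # Keys in S3 event notifications are URL-encoded.
        key = unquote_plus(record["s3"]["object"]["key"])
        if pattern.search(key):
            keys.append(key)
    return keys
```

Only the keys returned here would be forwarded to the importer queue, so the queue depth tracks files that actually need processing.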

Lessons Learned with Fargate Container Management

Our work on AWS with cloud-based weather processing (pun intended) is not terribly novel or radical. That’s kind of the point. We’re using reliable components in intelligent ways to process weather data quickly and efficiently. We pay the extra cost of the cloud to ensure reliability, and we’ve been rewarded with emergency-free nights and weekends.

There are other mechanisms to deal with scaling in and out, and I imagine some would say “Kubernetes” as well. Perhaps someday, but for now, we’re balancing AWS cost with available expertise and finding a workable middle ground. I find these use cases interesting when I read about them, so I hope others do as well.