We recently enhanced our geospatial capabilities by implementing a cloud-based WCS in our Labrador rollout. This modern approach combines the power of cloud infrastructure with established OGC standards, delivering scalable access to geospatial data. Let’s explore how our cloud-based WCS implementation works and why it matters for modern data services.

Understanding Cloud-Based WCS

Web Coverage Service (WCS) is an OGC standard for fetching subsets of geospatial data. While similar to Web Map Service (WMS), it returns the underlying data values rather than rendered map images, and its request structure is better thought out. Our cloud-based WCS implementation builds on these advantages, using cloud infrastructure to scale.

As part of our Boxer 2 initiative, we’ve been opening up the data we display for queries. The new WCS service is part of that, and it’s built on our Labrador Python library.

What Does WCS Do?

As a more modern OGC standard, WCS builds on lessons learned from earlier standards. One of the big ones is how it breaks down its requests:

- GetCapabilities – A high-level overview of available data sets

- DescribeCoverage – Specific information about a data set

- GetCoverage – Fetch data from a very specific data set
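As a rough sketch, here is how those three requests might look as key-value-pair URLs. The endpoint and coverage ID are placeholders rather than our real service, and the parameter names assume the WCS 2.0.1 KVP encoding.

```python
from urllib.parse import urlencode

# Hypothetical endpoint; substitute the real service URL.
BASE_URL = "https://example.com/wcs"


def wcs_url(request, **params):
    """Build a WCS 2.0.1 KVP request URL for the given operation."""
    query = {"service": "WCS", "version": "2.0.1", "request": request}
    query.update(params)
    return f"{BASE_URL}?{urlencode(query)}"


# Placeholder coverage ID, used only for illustration.
coverage_id = "example-coverage-id"

# High-level overview of everything the service offers.
capabilities_url = wcs_url("GetCapabilities")
# Details about one data set.
describe_url = wcs_url("DescribeCoverage", coverageId=coverage_id)
# Actual data from that data set (normally narrowed with subsets).
coverage_url = wcs_url("GetCoverage", coverageId=coverage_id)
```

Each step narrows the conversation, so the expensive detail only gets fetched for the data set you actually care about.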

With weather data, we can have a lot of individual data sets (time slices), and exposing them via the top-level call can get expensive. This was always a problem with WMS and even WMTS. Do you want to know everything we have? Prepare for 100 MB of XML!

That brings up one drawback. WCS uses XML, and XML is... tiring to work with. But hey, at least it’s all standard.

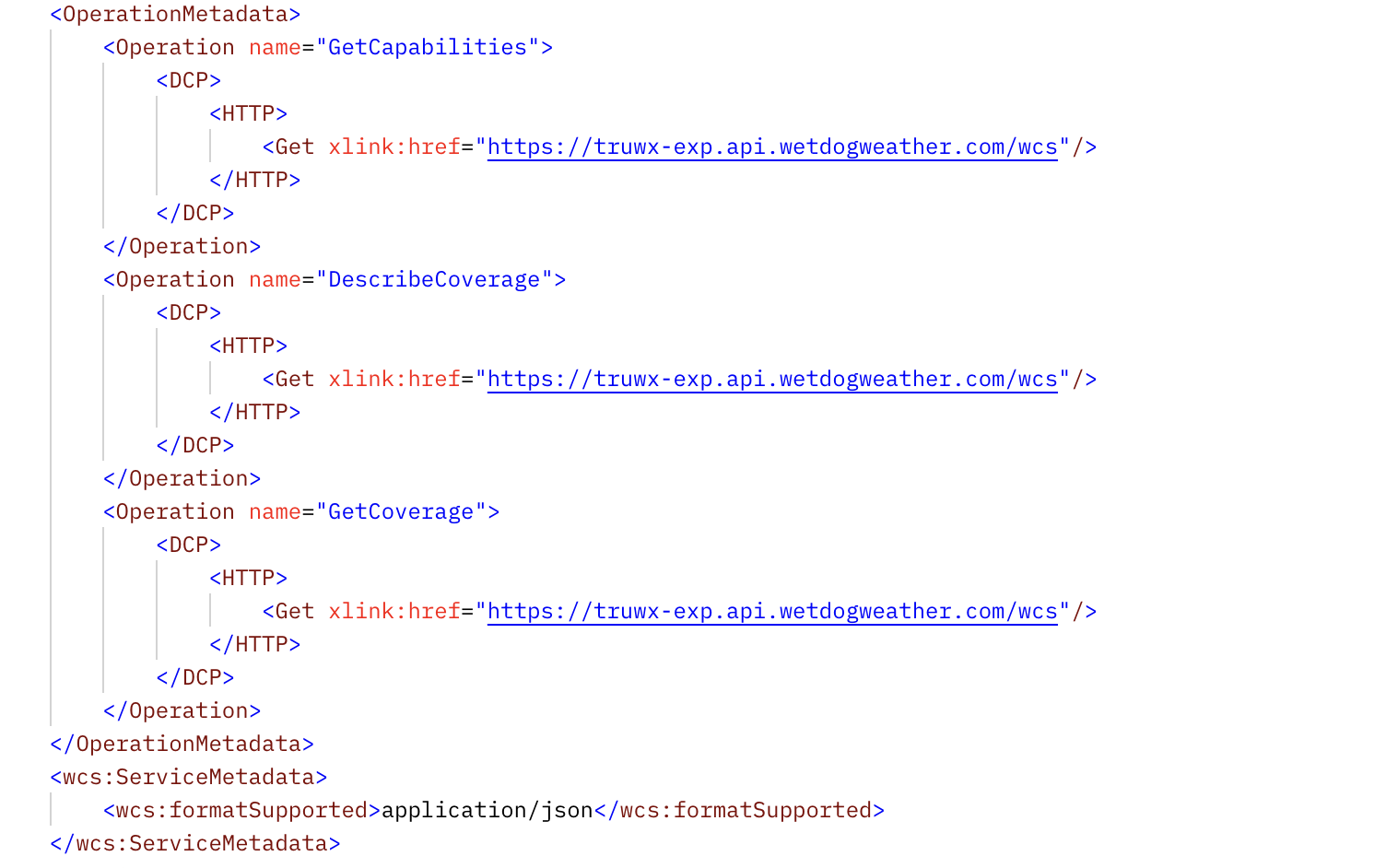

WCS Get Capabilities

Interacting with WCS is a conversation that starts with the GetCapabilities call to see what the service has. The return starts out with a bit of small talk that no one pays attention to.

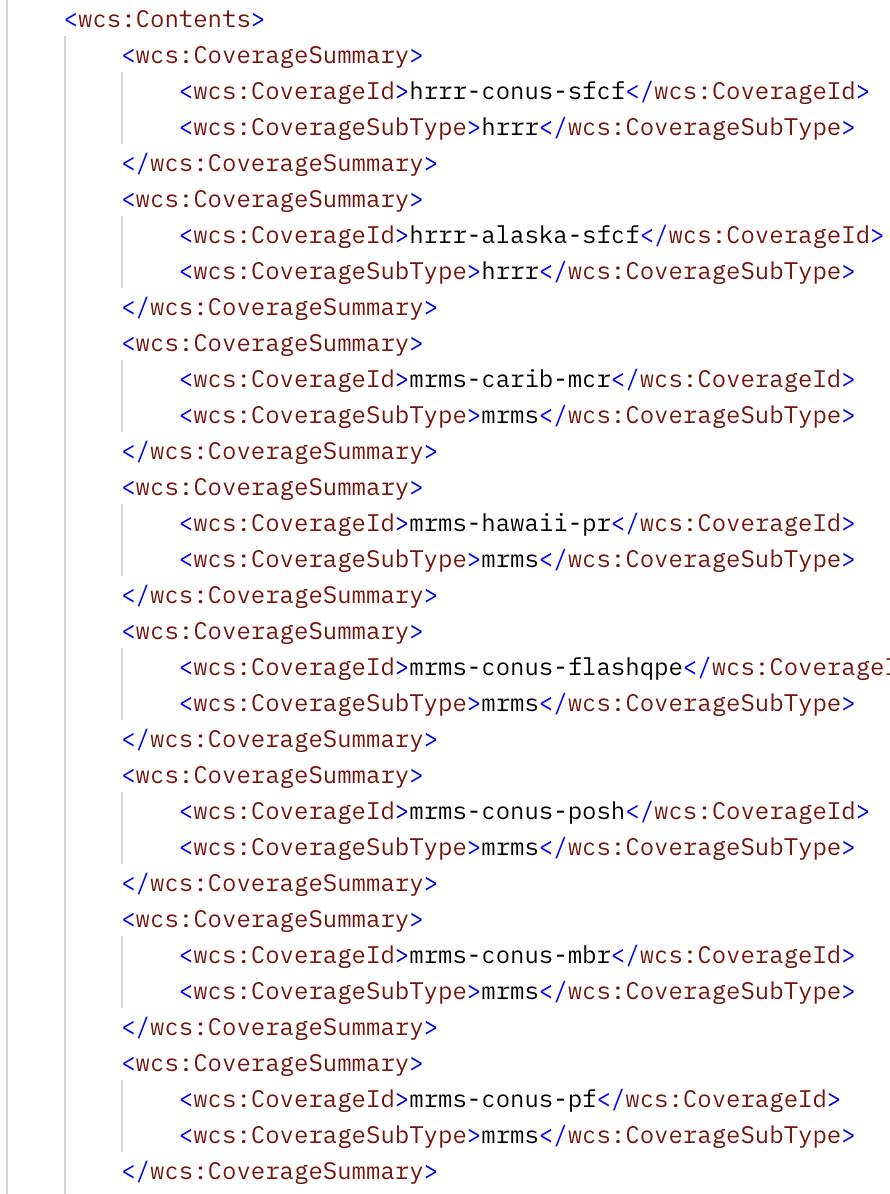

Then we get to the good stuff. What do we actually have? Here’s a subset.

This can go on for a while, but bear with it, as the WCS server is proud of itself. Also XML.

For us, the coverage IDs are high-level data sets and consist of <source>-<region>-<product>, which will be familiar if you’re in weather. If not, it’s more or less where the data comes from, what area of the world it covers, and the product.

The product can be something like “sfcf”, meaning surface, or “posh” if you’re a Victoria Beckham fan.
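To make that concrete, here is a minimal sketch of pulling coverage IDs out of a GetCapabilities response and splitting them into their parts. The XML fragment and the ID `acme-global-sfcf` are made up for illustration, and the split assumes the source and region never contain hyphens themselves.

```python
import xml.etree.ElementTree as ET

# Trimmed, made-up GetCapabilities fragment using the WCS 2.0 namespace.
SAMPLE_CAPABILITIES = """<wcs:Capabilities xmlns:wcs="http://www.opengis.net/wcs/2.0">
  <wcs:Contents>
    <wcs:CoverageSummary>
      <wcs:CoverageId>acme-global-sfcf</wcs:CoverageId>
      <wcs:CoverageSubtype>RectifiedGridCoverage</wcs:CoverageSubtype>
    </wcs:CoverageSummary>
  </wcs:Contents>
</wcs:Capabilities>"""

NS = {"wcs": "http://www.opengis.net/wcs/2.0"}


def coverage_ids(xml_text):
    """Return every CoverageId listed in a Capabilities document."""
    root = ET.fromstring(xml_text)
    return [el.text.strip() for el in root.iterfind(".//wcs:CoverageId", NS)]


def split_coverage_id(coverage_id):
    """Split '<source>-<region>-<product>' into named parts.

    Assumes source and region contain no hyphens; anything after the
    second hyphen is treated as the product.
    """
    source, region, product = coverage_id.split("-", 2)
    return {"source": source, "region": region, "product": product}


for cid in coverage_ids(SAMPLE_CAPABILITIES):
    print(cid, split_coverage_id(cid))
```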

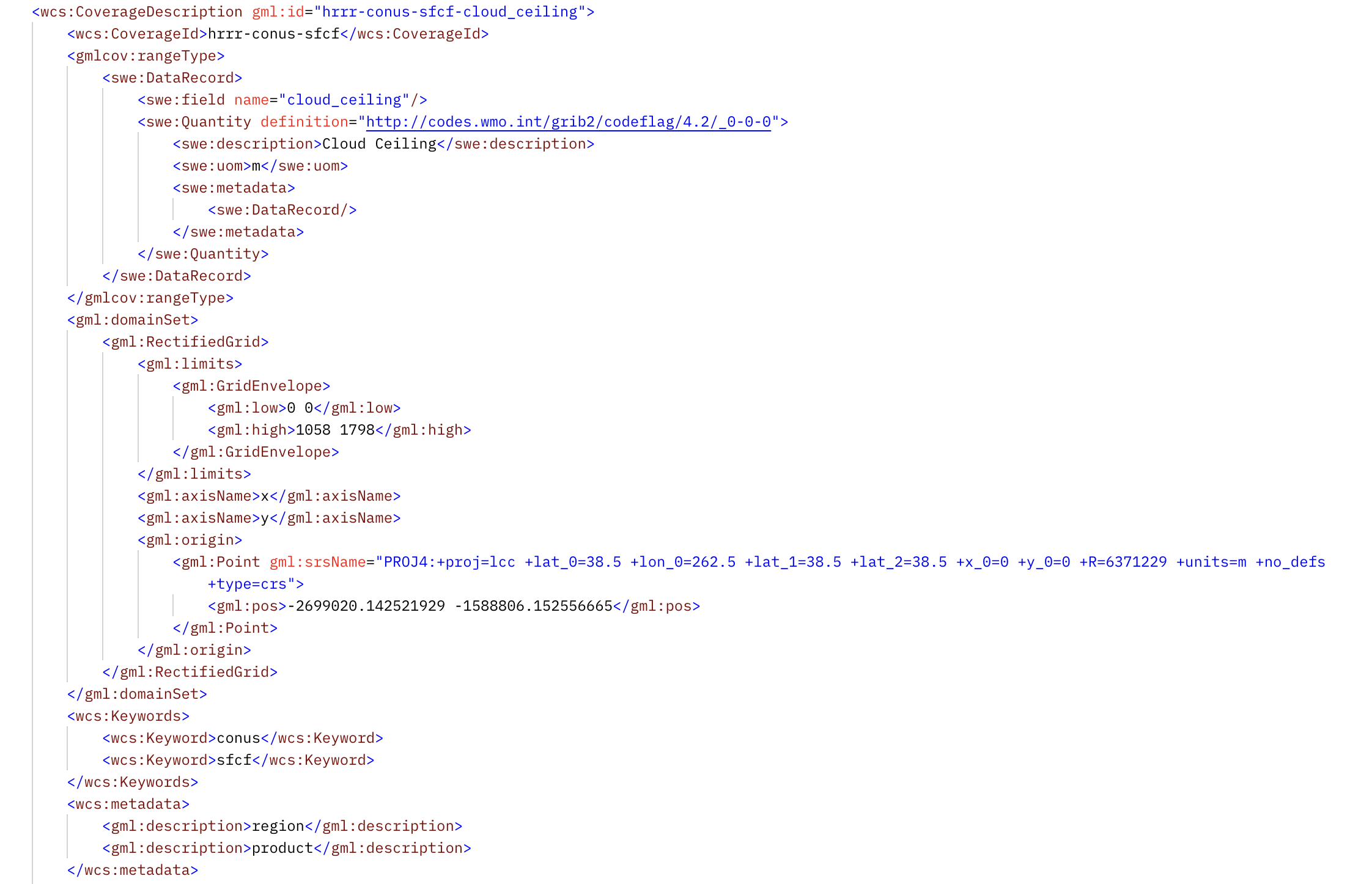

WCS Describe Coverage

With this coverage ID, we can get details on what’s in that data set and how it’s structured. For a big grid, this includes the grid size, what coordinate system it’s in, and where it is in the world.
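Here is a hedged sketch of reading those details out of a DescribeCoverage response. The XML below is a hand-written example in the GML 3.2 style that WCS 2.0 uses, not a real response from our service, and the grid numbers are invented.

```python
import xml.etree.ElementTree as ET

# Hand-written example response; values are invented for illustration.
SAMPLE_DESCRIPTION = """<wcs:CoverageDescriptions
    xmlns:wcs="http://www.opengis.net/wcs/2.0"
    xmlns:gml="http://www.opengis.net/gml/3.2">
  <wcs:CoverageDescription gml:id="acme-global-sfcf">
    <gml:boundedBy>
      <gml:Envelope srsName="http://www.opengis.net/def/crs/EPSG/0/4326"
                    axisLabels="Lat Long" srsDimension="2">
        <gml:lowerCorner>-90 -180</gml:lowerCorner>
        <gml:upperCorner>90 180</gml:upperCorner>
      </gml:Envelope>
    </gml:boundedBy>
    <gml:domainSet>
      <gml:RectifiedGrid dimension="2" gml:id="grid">
        <gml:limits>
          <gml:GridEnvelope>
            <gml:low>0 0</gml:low>
            <gml:high>720 1439</gml:high>
          </gml:GridEnvelope>
        </gml:limits>
      </gml:RectifiedGrid>
    </gml:domainSet>
  </wcs:CoverageDescription>
</wcs:CoverageDescriptions>"""

NS = {
    "wcs": "http://www.opengis.net/wcs/2.0",
    "gml": "http://www.opengis.net/gml/3.2",
}


def describe(xml_text):
    """Extract CRS, bounding box, and grid size from a coverage description."""
    root = ET.fromstring(xml_text)
    env = root.find(".//gml:Envelope", NS)
    lower = [float(v) for v in env.find("gml:lowerCorner", NS).text.split()]
    upper = [float(v) for v in env.find("gml:upperCorner", NS).text.split()]
    grid = root.find(".//gml:GridEnvelope", NS)
    low = [int(v) for v in grid.find("gml:low", NS).text.split()]
    high = [int(v) for v in grid.find("gml:high", NS).text.split()]
    # GridEnvelope bounds are inclusive, so the cell count is high - low + 1.
    size = [h - l + 1 for l, h in zip(low, high)]
    return {"crs": env.get("srsName"), "lower": lower, "upper": upper, "grid_size": size}


info = describe(SAMPLE_DESCRIPTION)
```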

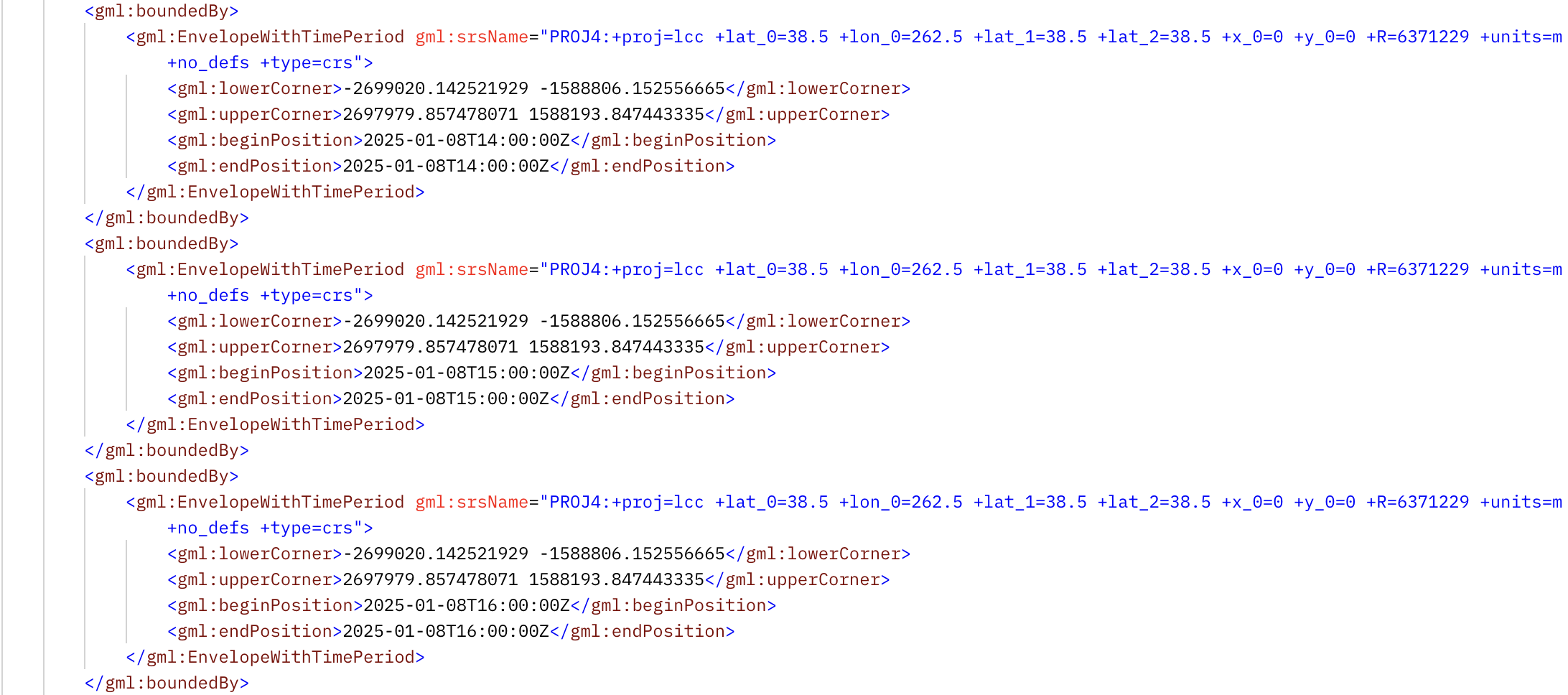

All good stuff, but we’re dealing with weather data, so where are the time slices?

We list them out individually. Is this a simple way to do it? Yes. Is it bloated and inefficient? Also yes. We may revisit that with some WCS extensions.

But hey, that return is easy to parse. You’ve got your extents and time slices, so now you can get to the data.

WCS Get Coverage

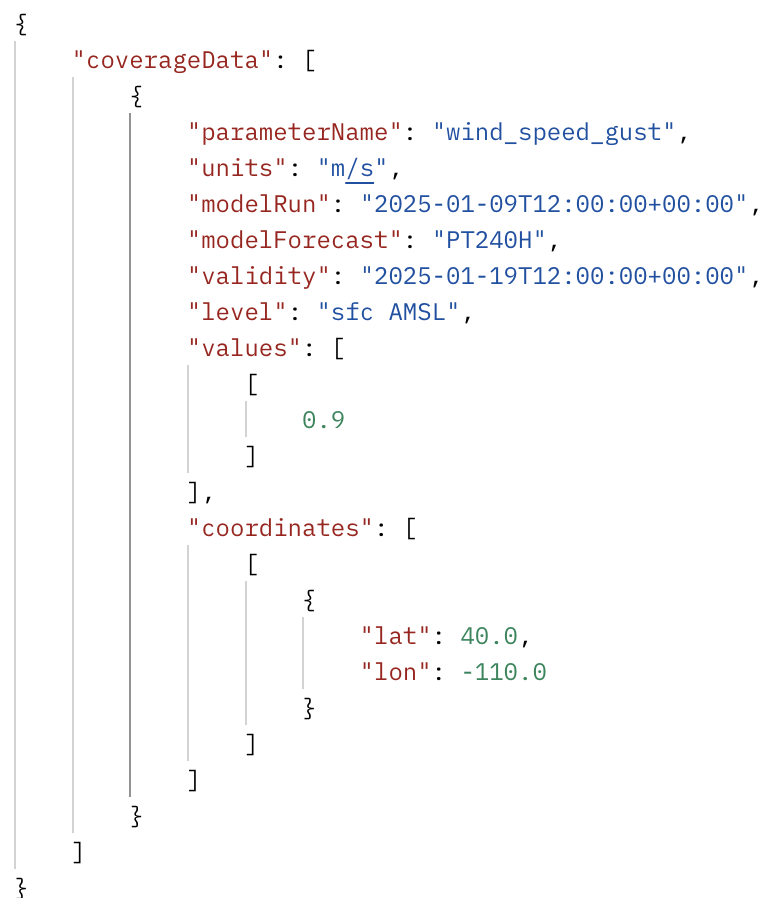

GetCoverage is when we get to the real data. Here, we’re asking for wind gusts at a particular point.

You can do grids and polygons, too. I hate how wordy this is, but for regular use, it’s fine. If our customers want to grab serious volumes of data, that’s Labrador.
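For comparison, a point request can also be written as a key-value-pair URL. This is only a sketch: the endpoint and coverage ID are placeholders, and the axis labels (`Lat`, `Long`, `time`) are assumptions, since the real labels come from the DescribeCoverage response.

```python
from urllib.parse import urlencode

# Hypothetical endpoint; substitute the real service URL.
BASE_URL = "https://example.com/wcs"


def point_coverage_url(coverage_id, lat, lon, time_iso):
    """Build a WCS 2.0.1 GetCoverage URL that slices to one point and time."""
    params = [
        ("service", "WCS"),
        ("version", "2.0.1"),
        ("request", "GetCoverage"),
        ("coverageId", coverage_id),
        # One subset parameter per axis; a single value is a slice.
        ("subset", f"Lat({lat})"),
        ("subset", f"Long({lon})"),
        ("subset", f'time("{time_iso}")'),
    ]
    return f"{BASE_URL}?{urlencode(params)}"


url = point_coverage_url("acme-global-sfcf", 40.0, -105.0, "2024-01-01T00:00:00Z")
```

Passing a pair of values per axis, such as `Lat(35,45)`, asks for a range instead of a single point, which is how a grid request would be trimmed.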

Cloud-Based WCS Architecture

We’re not the first to implement a cloud-based WCS server, but our approach has some unique advantages. We use Zarr to store time slices on Amazon’s S3 and DynamoDB for metadata management. The actual WCS server runs as a container on Fargate, allowing seamless scaling.
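The split between metadata and data can be pictured with a small sketch. Everything here is hypothetical: the record fields, the bucket name, and the key layout are invented, and a plain dictionary stands in for the metadata table so the example runs without any cloud services.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SliceRecord:
    """One time slice of one coverage (hypothetical schema)."""
    coverage_id: str
    valid_time: str  # ISO 8601 timestamp
    zarr_uri: str    # where the gridded data for this slice lives


class MetadataIndex:
    """In-memory stand-in for the metadata store."""

    def __init__(self, records):
        self._by_key = {(r.coverage_id, r.valid_time): r for r in records}

    def time_slices(self, coverage_id):
        """List available times, as DescribeCoverage would need."""
        return sorted(t for (cid, t) in self._by_key if cid == coverage_id)

    def locate(self, coverage_id, valid_time):
        """Find the storage location, as GetCoverage would need."""
        return self._by_key[(coverage_id, valid_time)].zarr_uri


index = MetadataIndex([
    SliceRecord("acme-global-sfcf", "2024-01-01T06:00:00Z",
                "s3://example-bucket/acme-global-sfcf/20240101T06.zarr"),
    SliceRecord("acme-global-sfcf", "2024-01-01T00:00:00Z",
                "s3://example-bucket/acme-global-sfcf/20240101T00.zarr"),
])
```

The point of the sketch is that listing and describing data only touches the small metadata store, while the large arrays are read only when a GetCoverage request actually needs them.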

When a customer needs more WCS servers, we increase the number in one place. Spinup is quick, as the service builds the cache as it goes, and our data is structured accordingly.
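A cache that builds as it goes can be as simple as memoizing the slice loader. The sketch below uses Python's `functools.lru_cache` as a stand-in; the loader function and URIs are placeholders, and this is not a description of how our server actually caches.

```python
from functools import lru_cache

load_calls = []  # records every real load, for illustration only


def fetch_slice_from_storage(zarr_uri):
    """Placeholder for an expensive read from object storage."""
    load_calls.append(zarr_uri)
    return {"uri": zarr_uri, "values": [0.0, 1.0, 2.0]}


@lru_cache(maxsize=128)
def get_slice(zarr_uri):
    """Load a slice on first use; later requests are served from memory."""
    return fetch_slice_from_storage(zarr_uri)


get_slice("s3://example-bucket/a.zarr")  # first request: goes to storage
get_slice("s3://example-bucket/a.zarr")  # repeat request: served from cache
get_slice("s3://example-bucket/b.zarr")  # new slice: goes to storage
```

Because nothing is preloaded, a new server can start answering requests right away and only pays for the slices customers actually ask for.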

You could build an amazing multi-dimensional gridded database service, and there are several, but that’s not what our customers really need. This approach serves sporadic, sparse access nicely, and if you need to do something more hard-core, there’s Labrador.